TrademarkNow Is Now a Part of Corsearch

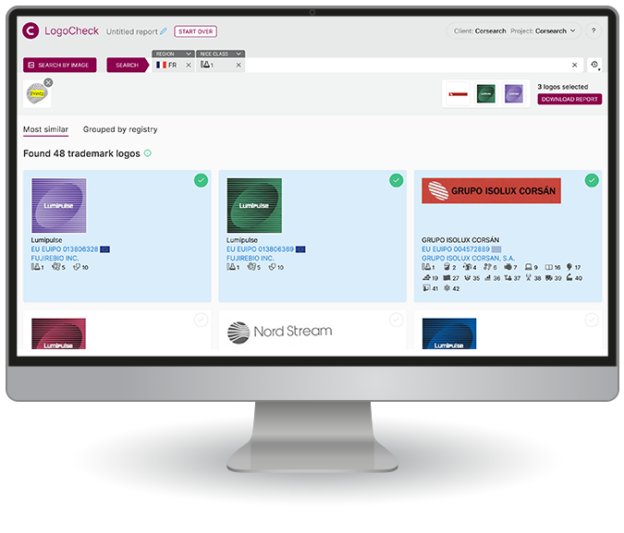

Corsearch is excited to offer trademark and IP professionals the TrademarkNow product range. We have retained all of the technology and innovation that make this suite of DIY trademark tools so intuitive and easy to use, so you still have access to all of its features and benefits.

Corsearch is trusted by 5000+ customers worldwide

Why Use Our Self-Service Trademark Tools

There are millions of brands in use globally today, and the number keeps growing. This growth makes trademark processes complex and challenging for IP professionals. Our intuitive and flexible DIY TrademarkNow tools enable you to fast-track your trademark management tasks and get results faster than ever before.

9.8 million watch notices were delivered to clients by Corsearch in 2022

9.6 million new marks were added to our database in 2022

$5 trillion worth of annual revenue is protected and commercialized by Corsearch

70% of the Forbes top 100 put their trust in Corsearch’s trademark solutions

Key Benefits

Fully Supported Self-Service Tools

Once you are onboard, our highly trained Customer Success team will work with you to understand your requirements and then support you. Our TrademarkNow Platform also gives you an ecosystem to collaborate, review, and customize reports to suit your needs.

This combination of flexible self-service tools with accurate, relevant data has made Corsearch a trusted partner for thousands of brands and IP practitioners all over the world.

Request a Demo

Our experienced team is here to support you with information and advice on how our products can meet your needs.

Corsearch Search and Watch Solutions

Our suite of TrademarkNow tools complements our extensive range of trademark solutions and services. We cater for all national and international projects with our full legal and regulatory screening tools (Screening and POCA Online, CORSEARCH Pharma-Check) as well as our expert-led Search and Watch solutions.

Learn more about our trademark products